Sherman, set the way-back machine to Silicon Valley, 1979. As soon as we arrive, we find chip makers turning out all sorts of CPUs (Central Processing Units).

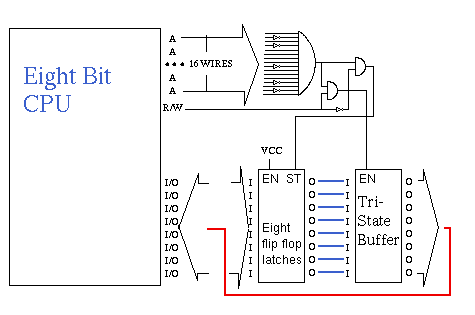

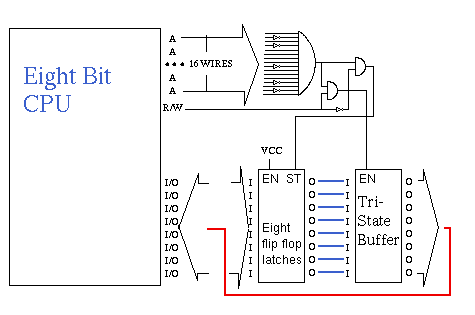

Most of them were limited to eight-bit parallel data paths, as shown in the figure above. Even if a CPU had multiple internal registers that could dish out eight bits at a time, four times in a row, to form a thirty-two-bit-wide data "word", that eight-bit bottleneck still restricted data throughput at any given clock speed, all other things being equal. As we look at these companies, they are engaged in some of the fiercest competition on the planet. They try to get the trade magazines to say good things about their products, and speed sells, big time. If one company's CPU can outperform another's, it is assured the lion's share of the market.

In the drawing above, a specific address is detected when certain wires of the sixteen-bit address bus are low and the others are high. The wires that pass through an inverter before reaching the AND gate must leave the CPU low, a binary zero; the ones that go straight from the CPU to the AND gate must leave the CPU high, a binary (logic) one. When all sixteen wires coming into the AND gate are at logic one, the AND gate enables the rest of the circuitry. Whether the operation is a read or a write then decides what happens next: on a read, the TriState Buffer carries its data payload onto the CPU's eight data lines; on a write, the CPU sets or clears the individual flip-flops in the eight-bit latch. If you connect the eight blue wires together as shown, you have what amounts to a RAM (Random Access Memory) cell. Break them apart, however, and they serve as an I/O port: the CPU can send out eight on/off signals by writing to that address, and sense the state of eight input wires by simply reading that address.

And that was the way it was until someone managed to reliably produce lead frames that allowed more than the standard forty wires on a chip. It was for this reason, the forty-pin limit, that all those companies made eight-bit computers.
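The address decoding and I/O port behavior described above can be modeled in a few lines of software. This is a toy simulation, not a description of any particular chip: the port address `0xC0F1` and the names `decode` and `Port` are hypothetical, chosen only for illustration. Each of the sixteen address wires either runs straight to the AND gate or passes through an inverter first, so the gate fires for exactly one address; the `tied` flag stands in for connecting the blue wires together.

```python
# Toy model of the decoder in the figure: sixteen address wires, some through
# inverters, all feeding one sixteen-input AND gate.
PORT_ADDRESS = 0xC0F1  # hypothetical port address, for illustration only


def decode(address: int, target: int = PORT_ADDRESS) -> bool:
    """Return True when the AND gate's output would be a logic one."""
    inputs = []
    for bit in range(16):
        level = (address >> bit) & 1      # level leaving the CPU on this wire
        straight = (target >> bit) & 1    # 1: straight wire, 0: via inverter
        inputs.append(level if straight else 1 - level)
    return all(inputs)                    # the sixteen-input AND gate


class Port:
    """Eight-bit latch (write side) plus tri-state buffer (read side)."""

    def __init__(self, tied: bool = False) -> None:
        self.tied = tied        # True: latch outputs wired to buffer inputs
        self.latch = 0          # eight flip-flops, set or cleared by writes
        self.input_pins = 0     # eight external input wires

    def write(self, address: int, data: int) -> None:
        if decode(address):
            self.latch = data & 0xFF

    def read(self, address: int):
        if not decode(address):
            return None         # buffer stays off: data bus left floating
        return self.latch if self.tied else self.input_pins & 0xFF


io = Port()
io.write(0xC0F1, 0xA5)        # CPU sets/clears the eight output flip-flops
io.input_pins = 0x0F          # outside world drives the eight input wires
print(hex(io.latch), io.read(0xC0F1), io.read(0x1234))  # 0xa5 15 None

ram = Port(tied=True)         # blue wires connected: a one-byte RAM cell
ram.write(0xC0F1, 0x42)
print(ram.read(0xC0F1))       # 66
```

Returning `None` for an unselected read is just this sketch's stand-in for the high-impedance state a real tri-state buffer presents.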

Consider the pin budget. Eight wires for the eight data lines, plus sixteen wires for the address bus: that's 24 so far. Then two for power and ground: 26. Read/Write and strobe: 28. Crystal clock: 30. Reset, Interrupt Request, and Non-Maskable Interrupt: 33. Memory TriState and Data Acknowledge, to allow DMA or other CPUs to run between clock pulses: 35. A thing called the "M" line, or on other models Phase Zero: 36. Single-step instruction: 37. That leaves only three more wires on a forty-pin lead frame.

INTeL Corporation did a neat trick: they multiplexed half of the sixteen-bit address bus with the data bus. The data bus was still only eight bits wide, but the trick freed up enough pins for four additional address lines, extending the address range from 64 KiB to sixteen times that much, one megabyte. It was this CPU, the INTeL i8088, that IBM chose for its PC (the IBM Personal Computer). Oddly enough, Motorola had just introduced the 68000 CPU in its own 64-pin package. So why did IBM go with the INTeL part? For one thing, Motorola at that time hadn't worked all the bugs out of its processor. For another, just because you can build a 64-pin lead frame doesn't mean there is tooling to solder such a part onto a board reliably, or robotic insertion tools to place it on the circuit board, and at that time manual soldering was considered so unreliable that it would have cut unit yield to unacceptable levels.
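The pin arithmetic above is easy to check. This sketch simply tallies the signal groups as listed in the text (a generic forty-pin, eight-bit CPU, not any one datasheet) and computes the address spaces mentioned: sixteen address lines, the i8088's twenty, and the 286's twenty-four in protected mode. The names `PIN_BUDGET` and `address_space` are made up for this example.

```python
# Pin budget for a generic forty-pin, eight-bit CPU, grouped as in the text.
PIN_BUDGET = [
    ("data lines", 8),
    ("address bus", 16),
    ("power and ground", 2),
    ("read/write and strobe", 2),
    ("crystal clock", 2),
    ("reset, IRQ, NMI", 3),
    ("memory tri-state, data acknowledge", 2),
    ("M line / phase zero", 1),
    ("single step", 1),
]
PACKAGE_PINS = 40

used = sum(pins for _, pins in PIN_BUDGET)
spare = PACKAGE_PINS - used


def address_space(lines: int) -> int:
    """Number of distinct byte addresses reachable with `lines` address wires."""
    return 2 ** lines


print(f"used {used}, spare {spare}")              # used 37, spare 3
print(address_space(16) // 1024, "KiB")           # 64 KiB
print(address_space(20) // 1024 ** 2, "MiB")      # 1 MiB (i8088)
print(address_space(20) // address_space(16))     # 16 times as much
print(address_space(24) // 1024 ** 2, "MiB")      # 16 MiB (286 protected mode)
```

Each extra address line doubles the reachable memory, which is why four more lines multiply the range by sixteen.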

Strangely, a mere three years later, the Motorola 68000-based Apple Macintosh was introduced, INTeL's contender was the i80286 CPU, and so the race was on. The i80286, or just 286 for short, had a protected mode that gave it a 24-bit address space and hardware memory protection, but when it started up in native mode (what INTeL calls real mode), it ran the same instruction set as the i8088, including the 20-bit address limitation. Additionally, once you switched the 286 into protected mode, there was no instruction to switch it back to native mode. IBM began making 286 machines, named the PC-AT (Personal Computer Advanced Technology). Since the CPU came up in native mode, it looked like a fast i8086, so software written for the PC would run on the new PC-AT. You might think, as I'm sure INTeL and IBM did, that people would soon write protected-mode software, but as soon as you switched on protected mode, none of your old software would work anymore. Remember, the BIOS in ROM on the motherboard was i8086 code, so you lost all your BIOS calls. That could have been dealt with, but wait: there is this thing called the extended BIOS. Nearly every card that plugged into the motherboard's backplane slots carried the vendor's own ROM, written in i8086 instruction code, and remember, once you turned on protected mode, there was no way to turn it off... Or so everybody thought... Enter Micro$oft.

Micro$oft had to get hold of the extended addressing that a full 24 bits of address could offer: sixteen megabytes! The only way to do that was to turn on protected mode and then, to use their entire software base, turn it back off again. It turns out that the serial keyboard IBM used was handled by a dedicated eight-bit CPU socketed right there on the motherboard, and that keyboard CPU could reset the main 286 CPU. So what they did was place a special pattern in memory and then program the keyboard controller to reset the main 286. On a cold power-up that distinct pattern would be unlikely to be in memory, so the code the 286 ran upon being reset first looked in a special place in memory for the pattern. If it was there, it did not reboot the machine; instead, it popped the stack, executed a return from interrupt, and carried on, back in native mode, with whatever job had needed protected mode. As it turned out, in the DOS world protected mode was used almost exclusively for memory managers and memory extenders; hardly anyone used it for application software. Fast forward.
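The reset-and-resume trick described above can be sketched as a tiny state machine. This is a toy model under stated assumptions: the pattern value `RESUME_MAGIC`, the slot name `MAGIC_SLOT`, the dictionary standing in for memory, and all function names are hypothetical, and the real BIOS details (exactly where the pattern lives and how control is handed back) are not modeled. What it shows is the logic: a reset normally means a cold boot, unless a pattern that would almost never be present at power-up says "resume instead".

```python
from dataclasses import dataclass, field

RESUME_MAGIC = 0x5A5A        # hypothetical "don't reboot, resume" pattern
MAGIC_SLOT = "resume_flag"   # hypothetical place in memory for the pattern


@dataclass
class Machine:
    memory: dict = field(default_factory=dict)
    protected_mode: bool = False
    log: list = field(default_factory=list)

    def enter_protected_mode(self) -> None:
        # On the 286 this was a one-way trip: no instruction turned it off.
        self.protected_mode = True

    def keyboard_controller_reset(self) -> None:
        # The reset drops the CPU back into native mode...
        self.protected_mode = False
        self.on_reset()

    def on_reset(self) -> None:
        # ...and the first code run after reset looks for the pattern.
        if self.memory.get(MAGIC_SLOT) == RESUME_MAGIC:
            # Assumption of this sketch: the pattern is cleared once used.
            del self.memory[MAGIC_SLOT]
            self.log.append("resume")     # pop the stack, return from interrupt
        else:
            self.memory.clear()           # cold boot: start over
            self.log.append("cold boot")


def use_extended_memory(m: Machine) -> None:
    m.memory[MAGIC_SLOT] = RESUME_MAGIC   # leave the note before going in
    m.enter_protected_mode()
    m.memory["extended"] = "copied"       # work only possible in protected mode
    m.keyboard_controller_reset()         # the only way back out on a 286


m = Machine()
use_extended_memory(m)
print(m.log, m.protected_mode, m.memory)  # ['resume'] False {'extended': 'copied'}
m.keyboard_controller_reset()
print(m.log)                              # ['resume', 'cold boot']
```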

Next thing you know, the i386 comes out, and it has an instruction to switch back to native mode. But by now so much code has been written for native mode, and next to none for protected mode, that market forces drove protected-mode programming out the proverbial Window. At some point hardware makers demanded assurance from Micro$oft that they wouldn't have to dual-code their extended BIOSes, and so the protected-mode Ice Age was in full bloom. At about this time I saw a ray tracer written in i386 protected mode to squeeze out every last ounce of speed, and I can tell you it would have been well worth the effort to write protected-mode code, but the inertia was too great. Then came the i486, and then the Pentium. Unknown to me at the time, Linux was starting to evolve. We are now up to multi-gigahertz machines built on processor chips whose internals are nothing like the original INTeL designs, yet they emulate, and boot up in, i8086 native mode; it has become a grandfathered-in standard. Only now is the industry beginning to talk about booting into protected mode, and then only because the Trusted Computing Initiative requires it.
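The difference between the 286 and the i386 comes down to one bit. In both chips, bit 0 of the machine status word (the 286's 16-bit MSW, which the i386 extends into CR0) is the Protection Enable (PE) flag. On the 286 the only instruction that loads that word, LMSW, can set PE but cannot clear it; the i386 added a MOV to CR0, which can. The sketch below models only that rule. It is a simplified illustration, not an emulator, and the class and method names are hypothetical.

```python
PE = 0x1  # Protection Enable: bit 0 of the 286 MSW / i386 CR0


class CPU286:
    def __init__(self) -> None:
        self.cr0 = 0  # native (real) mode after reset

    def lmsw(self, value: int) -> None:
        # LMSW loads only the low four bits, and an already-set PE stays set.
        self.cr0 = (self.cr0 & ~0xF) | (value & 0xF) | (self.cr0 & PE)

    def reset(self) -> None:
        self.cr0 = 0  # on a 286, a hardware reset was the only way back

    @property
    def protected(self) -> bool:
        return bool(self.cr0 & PE)


class CPU386(CPU286):
    def mov_cr0(self, value: int) -> None:
        # MOV CR0 loads the whole register, so PE can be cleared.
        self.cr0 = value & 0xFFFFFFFF


a = CPU286()
a.lmsw(PE)
a.lmsw(0)
print(a.protected)   # True: stuck in protected mode until reset

b = CPU386()
b.lmsw(PE)
b.mov_cr0(0)
print(b.protected)   # False: back to native mode without a reset
```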

INTeL originally had it right: once in protected mode, you don't want the CPU to be able to switch back to native mode, because native mode has no mechanism to supervise the use of memory. In protected mode, a fence can be erected around an island of memory such that any attempt by a program running in that island to read or write memory outside the island's confines raises a memory-protection exception, which the Kernel answers, deciding what action, if any, to take. Usually what happens under Linux is that the program itself is terminated, and an image of its memory, including the CPU registers and the stack (a kind of history of where to return to when a subroutine is called), is written as data into a file called simply core, in the program's current working directory, the PWD (Present Working Directory). The memory allocated for the program is then zeroed and returned to the pool of main memory for other programs to use. These core files are useful: they can be fed to a debugger to identify what went wrong in the sequence of code that makes up the program. Most users have no knowledge of this, because the distro house has set the default "core file size" to zero. If you want to see your core files, use the ulimit command, a bash builtin, to set "core file size" to a more reasonable value, say one megabyte for a nice round number. I have used core files to recover data from a crashed program, so they are useful even if you're not developing programs.

Protected mode also offers resistance to system crashes. If your Kernel is nearly bug-free, as Kernels tend to become after several years of development, it is almost impossible for a poorly written program running in user space to crash the Kernel. Note: misconfiguring a superuser program is another matter. If, say, you map the graphics screen memory of an X server smack in the middle of the running Kernel's memory, the system is bound to crash as soon as X fires up. But if the system is correctly configured, the hardware it runs on is solid, and the important bugs have been wrung out of the core system itself, user space cannot crash the system without a lot of very deliberate, intentional work to exploit some obscure weakness in this refined Kernel. In other words, a bug in userspace code ain't gonna' crash the system. Such a system needs a lot of attention to detail as to what is user space and what is system daemon space; if for some reason a system daemon is shut down, the system needs to restore its functionality.
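The "core file size" that ulimit sets is a per-process resource limit, RLIMIT_CORE, and a program can raise its own limit the same way the shell builtin does. This sketch uses Python's standard `resource` module (Unix only) to raise the soft limit to one megabyte, the round number suggested above, capped by the hard limit, which an unprivileged process cannot exceed. In bash the equivalent is `ulimit -c 1024`, because bash counts `-c` in 1024-byte units outside POSIX mode. Where the core file lands and what it is named also depend on the system's core pattern setting, which this sketch does not touch; `raise_core_limit` is a name made up for the example.

```python
import resource

ONE_MEGABYTE = 1024 * 1024  # bytes


def raise_core_limit(wanted: int = ONE_MEGABYTE) -> int:
    """Raise this process's soft core-file limit toward `wanted` bytes.

    The soft limit may not exceed the hard limit, so the request is capped.
    A limit that is already higher (or unlimited) is left alone.
    Returns the soft limit in effect afterwards.
    """
    soft, hard = resource.getrlimit(resource.RLIMIT_CORE)
    target = wanted if hard == resource.RLIM_INFINITY else min(wanted, hard)
    if soft != resource.RLIM_INFINITY and soft < target:
        resource.setrlimit(resource.RLIMIT_CORE, (target, hard))
    return resource.getrlimit(resource.RLIMIT_CORE)[0]


if __name__ == "__main__":
    print("core file size limit now:", raise_core_limit(), "bytes")
```

Because resource limits are inherited, a shell that raises its limit passes it on to every program it starts, which is why the ulimit route works for ordinary users.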

I once had a firewall with an intermittent memory problem. The networking would partially go away and then come back again. When I got curious enough to turn on the VGA monitor, I saw error messages: the system had detected something wrong with some networking daemons, stopped them, tried to restart them, went "Yikes, your memory seems bad at address blah, fixing, restarting blah", and that was the last error message. The firewall was once again working.

Now contrast this with the mindset Micro$oft had, where you run in an environment that allows protected mode to be turned off, that is, one that lets a program switch back to legacy native mode, the i8086 instruction set. The reason, of course, was that the BIOS calls, and the huge code base Micro$oft had developed, were all i8086-based. A virus has a pretty rough go of it in a protected-mode environment, but if all the virus has to do is wait for a BIOS call, or initiate one itself, it can easily do its dirty work without any supervision. Once that dirty work is done, the virus has privileged status under protected mode, and everything is possible. I'd hate to think what would happen if someone were to realize this and hold Micro$oft responsible for all those billions of dollars lost to virus-riddled E-mail. I'm not saying that properly setting up a protected-mode environment would have done away with viruses, but it would have made them a good deal more difficult to write, and there would have been far fewer of them, so much so that they would be as rare as the nearly mythical Linux virus is today.

As a programmer working in DOS in the late 1980s, writing 'C' and assembly language, I spent some time writing 6502 microprocessor code to talk to the Arpanet, the predecessor to the Internet, via a telnet server, and those guys and gals mostly used Unix. I was bemoaning the tendency of DOS to crash for the least little thing: an uninitialized or miscomputed pointer somewhere, and BOOM, the whole system goes down. The person I was talking to (yes, the chat program under Unix is called "talk") told me that you just didn't have that sort of nonsense under Unix, that the Kernel supervises all goings-on and shuts down anything that violates memory rights before it has a chance to interfere with the running Kernel. Now that I am running Linux, a variant of Unix, I can tell you it is pure bliss compared to DOS. I've had workstation computers up and running for over a year on a UPS (Uninterruptible Power Supply), burning CDs, writing and testing programs, the works, and the only time one went down was when I shut it down to plug new cards into the backplane slots! Had Micro$oft and IBM listened to INTeL and taken protected mode seriously, things in the '90s would have been much different.

Windows XP seems to be a breed apart, but the separation of privilege, user space versus system daemon space, is only now being addressed; it wasn't in the design spec from the beginning. A lot of code, 50 million lines of it, won't be rewritten overnight. Unless they write an Artificial Intelligence program to rewrite all of it, it may never get done; the job is that big. IBM froze OS/360 when, at 30 million lines, they labeled it unmaintainable. Every time they tried to fix something, something else would break. When a software base gets to a certain size, human beings cannot wrap their minds around the whole of it to manage it.

Do you remember a movie called Colossus: The Forbin Project? In it, an artificial intelligence, when switched on, deduced that there was another system like it in the Cold War Soviet Union and demanded to be connected to the other system. The people obliged, and the two computers began talking in an optimized computer language of their own design, one that human beings could not understand. If we devise an artificial intelligence to solve the Micro$oft mess, will we be able to read the code? Will Micro$oft's example of treating closed source and secrecy as virtues serve as an example to the artificial intelligence of how to conduct business? I do not believe that Micro$oft, Bill, and crowd would like to live in the world we all have to endure due to their policies, yet that may very well be where we are headed. Reap what you sow.